The YouTube algorithm’s bias toward extremist and sensationalist content has had real-world implications for democracy and public safety.

“Welcome to the latest medium for the American radical right — one more electronic venue that seems particularly suited for recruitment of the young,” Brentin Mock wrote of YouTube two years after it was launched.

Mock prophetically reported in the Southern Poverty Law Center’s “Intelligence Report” that “video-sharing websites like YouTube have become the hottest new venue for extremist propaganda and recruitment.”

Twelve years later, in March 2019, a self-described white supremacist named Brenton Tarrant entered a mosque in Christchurch, New Zealand, during Friday prayer and used a head-mounted camera to livestream himself opening fire on a crowd of worshippers. His attacks on two mosques ultimately killed 51 people.

During the livestream, Tarrant told viewers, “Remember lads, subscribe to PewDiePie.”

The comment was a reference to the online competition between two of YouTube’s most popular accounts, PewDiePie and T-Series, for the title of most-subscribed channel.

Felix Kjellberg, AKA PewDiePie, tweeted that he was “absolutely sickened” to be associated with the massacre, and later posted a video asking his followers to stop engaging with the “subscribe to PewDiePie” meme.

The incident was a tragic illustration of the enduring convergence of fame, extremism and violence on YouTube, and inspired many leaders — including New Zealand Prime Minister Jacinda Ardern and French President Emmanuel Macron — to call on major technology companies to ramp up their efforts to combat violent extremism.

A 2006 article from The Independent described YouTube as “already a favourite neo-Nazi website.”

As of mid-January of 2007, Mock reported, there were approximately 12,000 “white supremacist propaganda videos, hate rock concert highlight reels, and Holocaust denial pseudo-documentaries” openly available on video-sharing websites.

That was a time when this sort of content was still quantifiable. It is now so widespread and insidious that there’s no way of knowing exactly how much of it exists on the internet today.

Mock wrote that the availability of online video was not only making extremist recruitment less difficult, expensive, risky and time-consuming — it was also becoming easier to do anonymously.

“Video-sharing may be a particularly effective way for extremist groups, which have long sought ways to find new recruits, to connect with young people,” he wrote. “YouTube and its imitators are immensely popular among children, teenagers and young adults, and sometimes a single video will be downloaded literally millions of times.”


Additionally, video could easily be manipulated to create false or misleading images, such as by using crafty camera angles to make the crowd at a neo-Nazi rally appear larger than it actually was. Today, editing software and deepfake technologies fuel growing concern about the authenticity of online video.

“YouTube already bans ‘hate speech’... but the sheer volume of video files posted to the site each day makes it practically impossible to police all content,” Mock wrote in 2007. “As a result, particular videos are normally only removed as a result of a user complaint.”

Critics have long called for YouTube to do a better job of policing supremacist and extremist content. But even more than a decade after Mock’s article was published, the company has not found a viable solution.

“Given its billion or so users, YouTube may be one of the most powerful radicalising instruments of the 21st century,” wrote techno-sociologist Zeynep Tufekci in an op-ed for The New York Times in 2018.

John Herrman of the New York Times Magazine described the YouTube right as a dominant native political community composed of “monologists, essayists, performers and vloggers who publish frequent dispatches from their living rooms, their studios or the field, inveighing vigorously against the political left and mocking the ‘mainstream media,’ against which they are defined and empowered.”

Some are independent creators, while others are associated with larger media companies, like Rebel Media or the now-deplatformed InfoWars.

Herrman observed that this community draws in young people by adopting tactics similar to those used by conservative talk radio pundits such as Rush Limbaugh to appeal to previous generations.

“Unpopular arguments can benefit from being portrayed as forbidden, and marginal ideas are more effectively sold as hidden ones,” he wrote. “The zealous defence of ideas for which audiences believe they’re seen as stupid, cruel or racist is made possible with simple inversion: Actually, it’s everyone else who is stupid, cruel or racist, and their ‘consensus’ is a conspiracy intended to conceal the unspoken feelings of a silent majority.”

The most toxic parts of the internet have grown out of a digital culture of trolling that at one time seemed mischievous, but mostly innocuous. In recent years, however, hateful memes and social media provocateurs have made their way into mainstream culture and politics.

Whitney Phillips, an assistant professor of communication at Syracuse University who studies the effects of online trolling on mainstream culture, told NBC News that early media manipulation strategies established a “behavioural blueprint” that was later more dangerously exploited.

“[In recent years,] millions of dollars [have been] poured into propping up meme-based political content and advertisements, both from US political campaigns and lobbying organisations as well as shadowy foreign influence campaigns seeking to sow division and amp up racist rhetoric,” wrote Brandy Zadrozny and Ben Collins of NBC News.

The key to spreading these ideas is cross-pollination between social media platforms.

A 2018 census of alt-right Twitter accounts by VOX-Pol — a European academic research network focusing on violent online political extremism — found YouTube was the most-shared domain by a significant margin.

The most popular YouTube links included videos from alt-right personalities like Stefan Molyneux and Lauren Southern, as well as white nationalist channel Red Ice TV.

One in five fascist activists analysed by Robert Evans in a Bellingcat report last year credited YouTube with their “red-pilling” — an internet slang term used to describe the conversion to far-right beliefs. The video platform appeared to be the single most frequently discussed website among the community.

“Fascists who become red-pilled through YouTube often start with comparatively less extreme right-wing personalities, like Ben Shapiro or Milo Yiannopoulos,” Evans found.


A large-scale audit of user radicalisation on YouTube published last month by researchers at Cornell University affirmed this observation. After processing nearly 80 million comments on videos from 360 channels, they found evidence that viewing videos from “alt-lite” and “Intellectual Dark Web” creators often served as a gateway to the consumption of “alt-right” content. The three communities increasingly share the same user base, which consistently migrates toward more extreme content.

“[YouTube] provides a pathway for those interested in the American fascist movement,” Evans wrote.

This is true in other places, too.

A recent New York Times investigation found that “time and again, videos promoted by the site have upended central elements of daily life [in Brazil].”

YouTube’s powerful recommendation system has helped members of the country’s newly empowered far right — both grassroots organisers and federal lawmakers — to rise to power, and provided a platform for them to harass and threaten their political enemies.

These YouTube-bred politicians “[govern] the world’s fourth-largest democracy through internet-honed trolling and provocation.”

Now-president Jair Bolsonaro was an early adopter, and used his channel to share hoaxes and conspiracies. He began to gain notoriety as Brazil’s political system collapsed, at the same time that YouTube’s popularity in the country soared. The far-right community saw massive growth on the site, helping Bolsonaro gain support “at a time when his country was ripe for a political shift.”

Subscription numbers for alt-right Brazilian YouTubers soared following the 2018 election of Bolsonaro.

Alt-right Brazilian YouTubers saw a jump in subscriptions following the 2018 election

In addition to serving as a platform for their ideas to gain momentum, YouTube’s algorithms have been biased towards their spread.

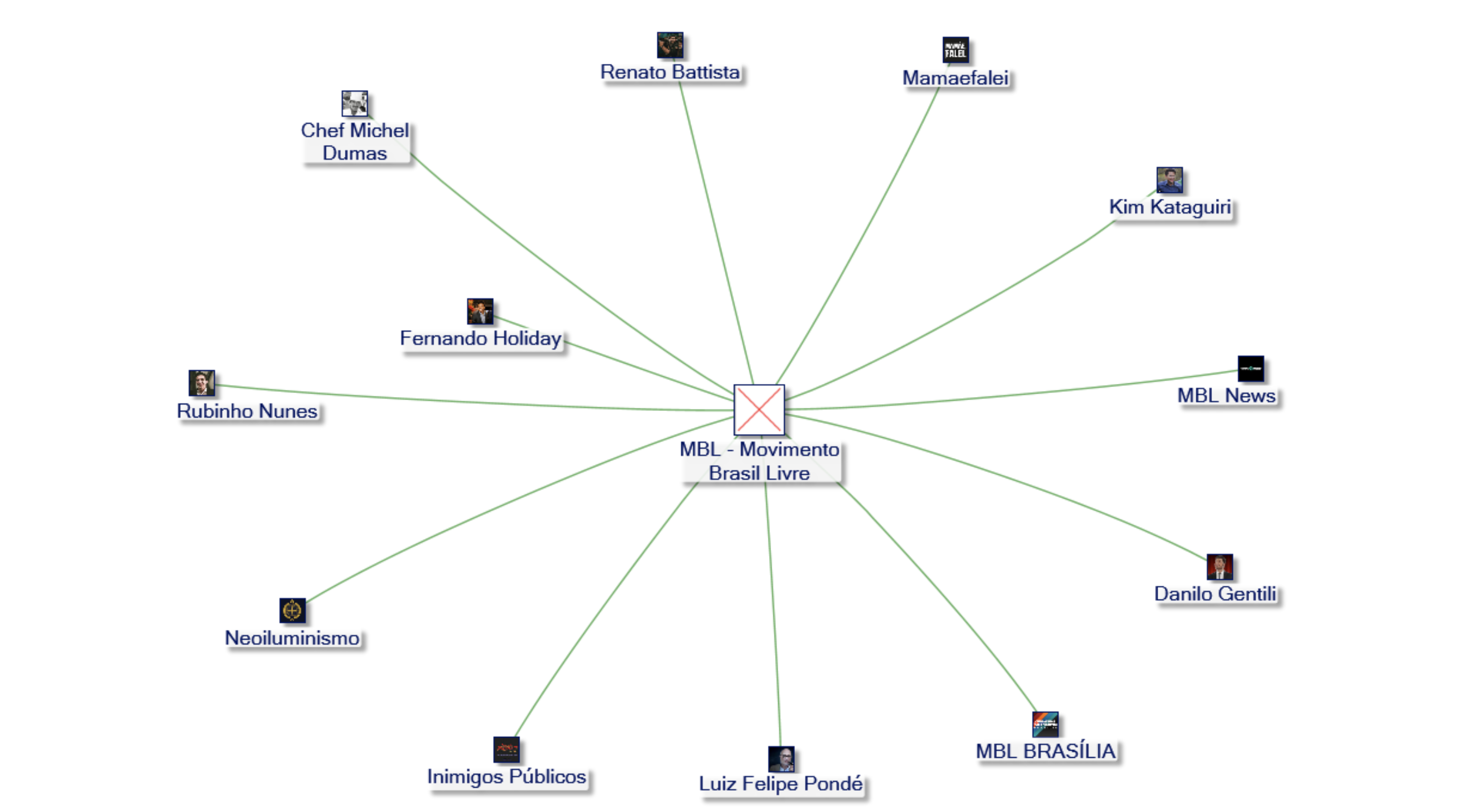

Movimento Brasil Livre (MBL) — the Brazilian equivalent of the Tea Party Movement — jumped from 160,000 to 1,300,000 subscribers during 2018, and earned about 40 percent of its funding from YouTube ad revenue. The channel posts videos about controversial or trending topics, some of which have even been shared by Paul Joseph Watson and Milo Yiannopoulos.

The party was started by 23-year-old Kim Kataguiri, who in 2018 became the youngest person ever elected to Congress in Brazil thanks to his strategic use of YouTube, WhatsApp memes and fake news.

Several YouTube provocateurs are now serving in the highest levels of government.

“I guarantee YouTubers in Brazil are more influential than politicians,” Arthur Mamãe Falei, another MBL-affiliated YouTuber and newly elected state representative, told BuzzFeed News.

Network graph of channels related to Movimento Brasil Livre, a Brazilian conservative political party. Compiled using NodeXL Pro.

MBL has had to choose when it is and is not advantageous to align with Bolsonaro. Its viewership and subscriptions began to decline around May 2019, after the group decided not to endorse a demonstration in support of the president.

The party was accused of developing a “sick jealousy” and conspiring against Bolsonaro’s government. #MBLTraidoresdapatria — or “MBL national traitors” — trended on Twitter.

The drive toward extremist content on Brazilian YouTube has extended to health-related and other educational searches, as well.

Parents seeking health information about diseases such as Zika are met with dangerous disinformation about vaccines. Students are disrupting learning environments by quoting conspiracy theories and secretly recording their instructors.

“Social media is winning,” said Dr. Auriene Oliveira, many of whose patients have declined necessary larvicide treatment, citing false claims they had learned on YouTube.

Danah Boyd, the founder of Data & Society, considers YouTube’s impact in Brazil to be a consequence of its “unrelenting push for viewer engagement, and the revenues it generates,” as well as a worrying indication of the platform’s growing influence on democracies around the world.

After analysing transcripts and comments from thousands of Brazilian political videos, a team of researchers at the Federal University of Minas Gerais found that right-wing channels saw far faster audience growth than others.

Using a technique similar to Guillaume Chaslot’s AlgoTransparency tool, the team found that “after users watched a video about politics or even entertainment, YouTube’s recommendations often favoured right-wing, conspiracy-filled channels.”


Once a user is exposed to this sort of content, and the algorithm registers it, they are likely to be recommended more of it. Users might think they are reaching conclusions on their own, when in reality the system is making the connections on their behalf.

“As far-right and conspiracy channels began citing one another, YouTube’s recommendation system learned to string their videos together,” wrote The Times’ Max Fisher and Amanda Taub. “However implausible any individual rumour might be on its own, joined together, they created the impression that dozens of disparate sources were revealing the same terrifying truth.”

By doing this, the researchers concluded, YouTube had united and built an audience for once-marginal channels.

Although YouTube representatives disputed claims that the system privileges a particular viewpoint or directs users toward extremism, they did concede some of the findings and promised to make changes.

However, despite saying its internal data contradicted the researchers’ findings, YouTube “declined the Times’ requests for that data, as well as requests for certain statistics that would reveal whether or not the researchers’ findings were accurate.”

Despite YouTube’s global reach, the company’s efforts at curbing the spread of disinformation and radicalising content have largely prioritised English-language content.

As the Brazilian examples show, this is clearly not enough. It is dangerous that a company that has such significant influence in the global information marketplace is so unprepared to meaningfully address the consequences of its design decisions.

“It’s incredibly difficult to study the technical aspects of YouTube systematically from the outside because their systems are so opaque,” said Becca Lewis of Stanford University. “A lot of the changes that they make, they’re asking us to trust that ‘Oh, this is going to make an impact.’”

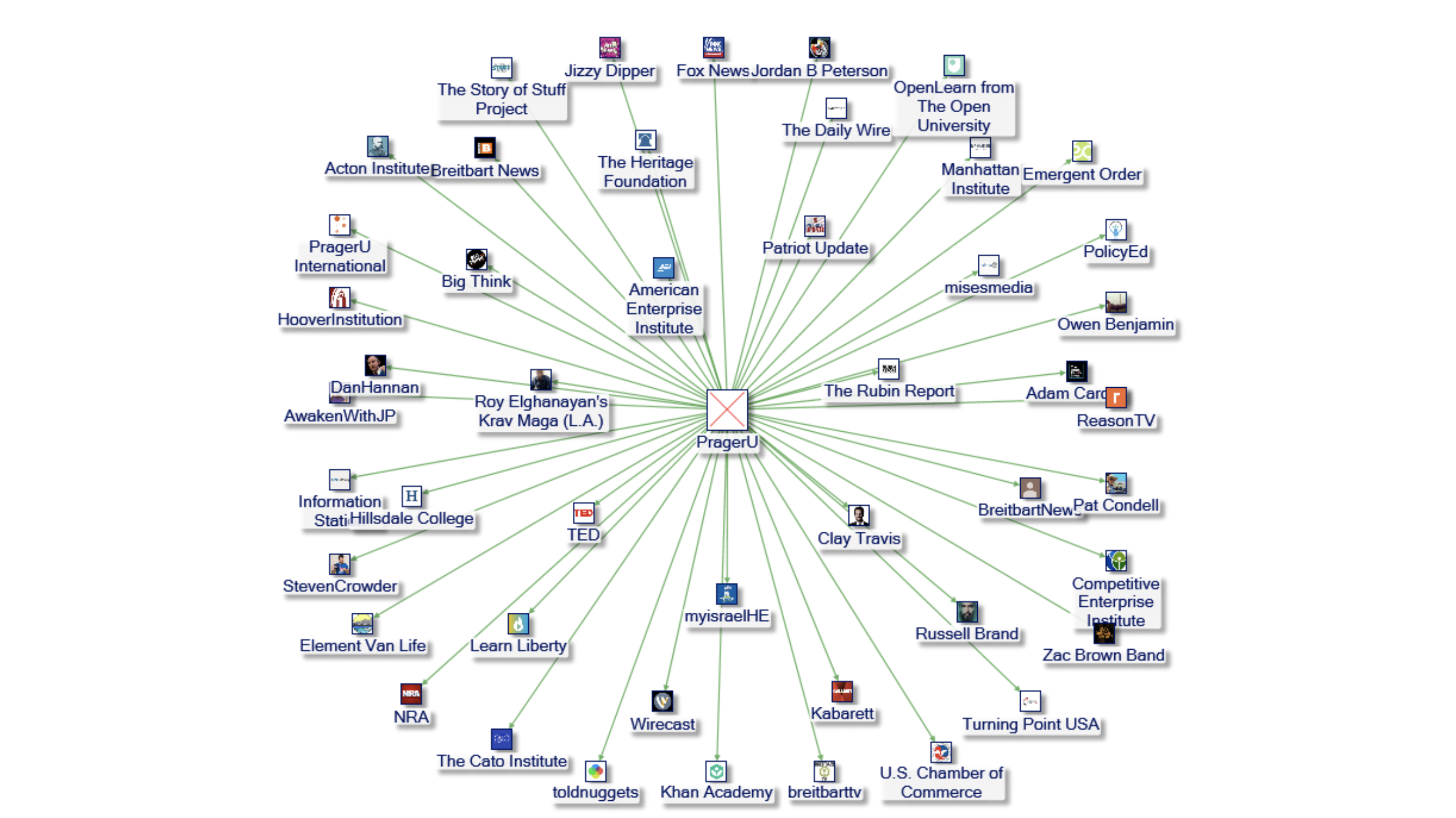

For years, researchers like Lewis have been raising alarms about the dangerous, if unintentional, consequences of YouTube’s engagement-driven recommendation algorithm. Last September she published a report for Data & Society entitled “Alternative Influence: Broadcasting the Reactionary Right on YouTube”.

In the report, she defined a group she called the Alternative Influence Network (AIN) as “an assortment of scholars, media pundits, and internet celebrities [who] are using YouTube to promote a range of political positions, from mainstream versions of libertarianism and conservatism, all the way to overt white nationalism.”

These creators are trying to capitalise on a changing media environment by providing an alternative source for news and political commentary.

YouTube provides financial incentives for individuals to broadcast and build their audiences through its Partner Programme, which is open to content creators who have received 4,000 “watch hours” over the course of a year and have at least 1,000 subscribers. Content creators often also parlay their popularity on YouTube into other financial opportunities, such as Patreon donations or merchandise sales.

“One reason YouTube is so effective for circulating political ideas is because it is often ignored or underestimated in discourse on the rise of disinformation and far-right movements,” Lewis wrote.

Lewis compiled data for 65 influencers across 81 channels to create an illustrative, though not comprehensive, snapshot of the Alternative Influence Network.

Viewership trends over time for 10 of the most-viewed channels in the network.

Members of the network (not all of whom align with the “alt-right”) comment on each other’s videos, reference each other’s ideas and interact on other platforms like Twitter and Instagram. These social ties, combined with the algorithm’s pattern identification, help build trust.

“By connecting to and interacting with one another through YouTube videos, influencers with mainstream audiences lend their credibility to openly white nationalist and other extremist content creators,” Lewis wrote.

Network graph of channels related to PragerU — an unaccredited conservative online “university”. Compiled using NodeXL Pro.

AIN influencers try to court young audiences drawn to rebellion by presenting themselves as social underdogs and members of a hip counterculture.

“The entire countercultural positioning is misleading: these influencers are adopting identity signals affiliated with previous countercultures, but the actual content of their arguments seeks to reinforce dominant cultural racial and gendered hierarchies,” Lewis wrote. “Their reactionary politics and connections to traditional modes of power show that what they are most often fighting for is actually the status quo.”

In many ways, Lewis wrote, YouTube is built to incentivise the behaviour of extremist political influencers.

Right-wing YouTuber Paul Joseph Watson poses with the plaque YouTube sent to congratulate him for surpassing 1 million subscribers.


“In a media environment consisting of networked influencers, YouTube must respond with policies that account for influence and amplification, as well as social networks,” she said.

Lewis believes radicalisation and extremism on YouTube are fundamentally the result of larger societal problems.

“The platforms didn’t create propaganda, they didn’t create racism, they didn’t create sexism,” she told me. “I think there’s a meaningful argument to be made that they are exacerbating these problems and amplifying them in many ways… they work in concert with other media structures and institutions.”

When Mock first began to observe the trend of white supremacist content on YouTube, the messaging was more obvious and overt. Today, it can be hard to know you’re entering radical territory until it’s too late.

You might start by watching a regular news clip, or click on a seemingly unassuming explainer video from PragerU, and be gradually guided down a path of propagandist content.

Lewis thinks it’s important to pay attention to the way in which extremist discourse is becoming normalised in the mainstream.

“I think that sometimes this focus on radicalisation can end up reinforcing the idea that these far-right ideas are fringe,” she said, “when actually part of the reason that they’ve become such a powerful force online and in the real world is that you have Donald Trump retweeting far-right memes coming from 4chan and you have the centres of power in the US amplifying, reinforcing this stuff.”
