Vulnerabilities in YouTube’s architecture can pose a serious risk for the state of public knowledge.

In 2018, Michael Golebiewski of Microsoft Bing coined the term “data voids”, used to define “search terms for which the available data is limited, non-existent, or deeply problematic.”

Malicious actors can take advantage of such voids by flooding search results with disinformation or conspiracy content intended to advance a certain agenda.

For example, in a major breaking news scenario, they might post misleading or reactionary content before authoritative sources have had the chance to publish official details, thereby influencing the narrative for anyone searching for information on the topic.

"[A]dversarial actors try to jump on that news story in order to shape the landscape, both in text and in video," said Danah Boyd, the founder and president of Data & Society.

This happened following a mass shooting at a church in Sutherland Springs, Texas, in 2017.

“As journalists were scrambling to understand what was happening,” Boyd said, “far-right groups, including self-avowed white nationalists, were coordinating online to shape Twitter, Reddit and YouTube to convey the message that the Sutherland Springs shooter was actually a member of Antifa, a group that they actively tried to pump up as a serious threat in order to leverage the news media’s tendency for equivalency.”

These tactics are particularly effective on YouTube because its news ecosystem does not move as quickly, and most major authoritative sources don’t specifically optimise for the platform. Conversely, malicious actors who are intent on pushing a certain agenda understand how to produce connections between videos — such as CDC content and anti-vaxxing messages — by commenting, sharing links, and watching videos in a certain order so that the algorithm identifies those associations as a trend.

Content creators might also try to mislead people with short attention spans for news by posting sensationalist and clickbait-y headlines and thumbnails.

Former YouTube employee Guillaume Chaslot created a recommendation explorer that extracts the most frequently recommended videos about a query. He found that searches related to common conspiracies yielded far more misleading content on YouTube than on Google, particularly from the site’s recommendation engine.
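The idea behind such an explorer can be illustrated with a minimal sketch. This is not Chaslot's actual tool: the function `most_recommended`, the `get_recommendations` callback and the toy data are all hypothetical, and the sketch simply assumes that recommendations are followed outward from a query's search results and tallied.

```python
from collections import Counter, deque


def most_recommended(seed_videos, get_recommendations, depth=2, top_n=5):
    """Tally how often each video is recommended while following
    recommendations outward (breadth-first) from the seed videos.

    get_recommendations is a hypothetical callback that returns the
    list of videos recommended alongside a given video.
    """
    counts = Counter()
    visited = set(seed_videos)
    queue = deque((video, 0) for video in seed_videos)
    while queue:
        video, level = queue.popleft()
        if level >= depth:
            continue  # do not expand beyond the requested depth
        for rec in get_recommendations(video):
            counts[rec] += 1  # every appearance as a recommendation counts
            if rec not in visited:
                visited.add(rec)
                queue.append((rec, level + 1))
    return counts.most_common(top_n)


# Invented toy data standing in for real search results and recommendations.
toy_graph = {
    "search_result_a": ["video_x", "video_y"],
    "search_result_b": ["video_x", "video_z"],
    "video_x": ["video_y"],
    "video_y": ["video_x"],
    "video_z": ["video_x"],
}

top = most_recommended(
    ["search_result_a", "search_result_b"],
    lambda video: toy_graph.get(video, []),
)
print(top)
```

In this toy example, `video_x` surfaces most often because nearly every other video points to it, which is the kind of concentration such a tool is meant to expose.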

Additionally, YouTube takes a long time to review and take down videos, often allowing people to get away with propagating disreputable content.

“[B]y uploading defamatory content on YouTube before a critical period, and removing the videos afterwards, content creators can effectively promote defamatory videos without liability,” Chaslot wrote in a post for Medium.

This is especially concerning given the immense number of people around the world who turn to YouTube as a primary source of information.

“[I]t increasingly feels like YouTube, like Google, is being harnessed as a powerful agenda booster for certain political voices,” wrote Jonathan Albright, the director of the Digital Forensics Initiative at Columbia University’s Tow Center for Digital Journalism.

Malicious actors work to bring fringe ideas into the mainstream by creating and spreading content that integrates the use of strategic terms, such as “crisis actor” or “deep state”.

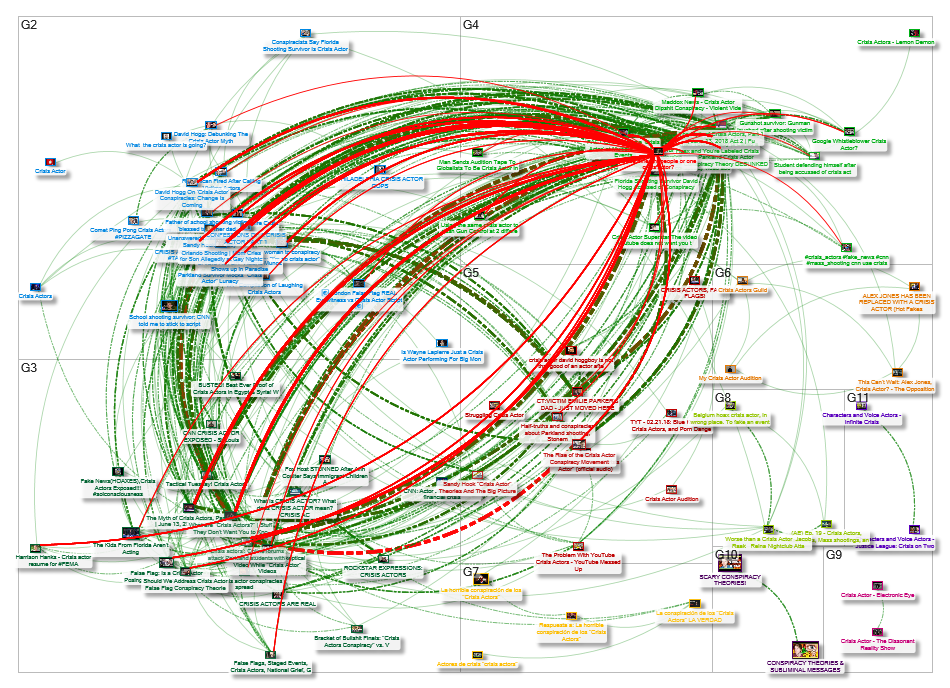

A network map of 150 videos related to the query “crisis actor”, created using NodeXL Pro. The second most-linked video in the network, after a news piece from the Washington Post, is a conspiracy video entitled “Three different people or one crisis actor?” by MrSonicAdvance.
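As a rough illustration of what “most-linked” can mean in a map like this, the sketch below ranks videos by how many other videos point to them. It assumes that “most-linked” corresponds to the number of inbound links, which the caption does not confirm; the function name and the edge list are invented for the example and are not Albright's data.

```python
from collections import Counter


def rank_by_inbound_links(edges):
    """Rank videos by the number of other videos linking to them.

    edges is a list of (source, target) pairs, one per link.
    """
    inbound = Counter(target for _source, target in edges)
    return inbound.most_common()


# Invented toy links; not taken from the network described above.
toy_edges = [
    ("video_a", "news_piece"),
    ("video_b", "news_piece"),
    ("video_c", "news_piece"),
    ("video_a", "conspiracy_video"),
    ("video_b", "conspiracy_video"),
    ("video_c", "video_a"),
]

ranking = rank_by_inbound_links(toy_edges)
print(ranking)
```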

“They produce YouTube videos,” Boyd said. “They seed the term throughout Wikipedia. They talk about the term on Twitter. They try to get the term everywhere, to shape the entire ecosystem. And then they target journalists, baiting them into using the phrase as well. This is how extremist groups take conspiratorial logics to the mainstream.”

Once those phrases infiltrate mainstream news coverage, it increases their popularity in search results, thereby giving extremist or white supremacist ideas a semblance of greater legitimacy.

In the weeks following the 2018 shooting at Stoneman Douglas High School in Parkland, Florida, Albright conducted a landscape survey of the network of videos users were being exposed to when searching for the phrase “crisis actor”.

He found that 90 percent of the video titles were “a mixture of shocking, vile and promotional”, including themes like “rape game jokes, shock reality social experiments, celebrity pedophilia, ‘false flag’ rants, and terror-related conspiracy theories dating back to the Oklahoma City attack in 1995.”

Collectively, the 8,842 videos in the network had registered nearly 4 billion views, and exemplified the convoluted web of conspiracy content available on YouTube.

“Every religion, government branch, geopolitical flashpoint issue, shadow organisation — and every mass shooting and domestic terror event — are seemingly represented in this focused collection,” Albright wrote.

Alex Jones, who runs the far-right fake news website InfoWars, was among the most prominent champions of the “crisis actor” conspiracy. His video about a Parkland student — “David Hogg Can’t Remember His Lines in TV Interview” — was removed for violating guidelines against bullying.

CNN reported in March 2018 that ads for major companies and organisations, including Nike, 20th Century Fox, the Mormon Church, Alibaba and the National Rifle Association, were being played before InfoWars conspiracy videos, thereby allowing Jones to profit from his dangerous misinformation campaigns.

“Even an ad for USA for UNHCR, a group that supports the [United Nations High Commissioner for Refugees], asking for donations for Rohingya refugees was shown on an InfoWars YouTube channel,” CNN found.

Many of the brands suspended their ads on the channel after being notified by CNN, saying they were unaware they were running on Jones’ channel.

Later that year, Jones and InfoWars were banned by Facebook, Apple, YouTube and Spotify for violating the platforms’ terms of service and community guidelines. Prior to being taken down by YouTube, InfoWars and its associated channels had amassed 2.4 million subscriptions.

Despite being deplatformed, InfoWars continues to post new content on its website.

Conspiracy theories are enticing because they provide ready-made explanations for complex issues and news events.

“Conspiracy stories hit our emotional fight or flight triggers. And the stories rest on the unstated premise that knowledge of the conspiracy will protect you from being manipulated,” said Robert J. Blaskiewicz Jr., a columnist for the Committee for Skeptical Inquiry, a non-profit educational organisation that applies scientific analysis to conspiracy theory claims. “This in itself compels people to watch and absorb as much as they can and to revisit videos.”

The genre has become so thoroughly enmeshed with YouTube’s video culture that reeling it in necessitates a monumental undertaking.

“Every time there’s a mass shooting or terror event, due to the subsequent backlash, this YouTube conspiracy genre grows in size and economic value,” Albright said. “The search and recommendation algorithms will naturally ensure these videos are connected and thus have more reach.”

This type of content gets recommended by the algorithm because those videos tend to draw lengthy engagement from a significant number of viewers. Due to the cyclical supply-and-demand nature of the platform, if the recommendation engine is promoting conspiracy videos, creators are incentivised to make more of them.

Zoë Glatt, a PhD researcher at the London School of Economics, said many YouTubers are greatly concerned about creating content that will be received well by the algorithm and continue to draw views and generate revenue.

“People replicate the content that is trending,” Glatt said. “...[The logic is like,] ‘What’s happening at the moment, what are people searching for, how can I replicate that thing maybe in my own way to make it funny but also to jump on the fact that people are searching this at the moment?’”

Influencers who build trust and intimate relationships with their audiences can amplify fringe ideas and bring them into the mainstream. Even if they don’t embrace the theories fully, simply entertaining them or framing them as open-ended questions could seed doubts among viewers.

Some of the most popular creators on the platform are already doing this. Logan Paul released a 50-minute satirical flat-earth documentary. Shane Dawson posted a sponsored, multi-part “Conspiracy Series” that, among other things, questioned the veracity of 9/11.

“How do you grapple with these things when the most popular creators on your platform are producing some of this content, not just the fringes that rely on the recommendation algorithm?” said Becca Lewis, a Stanford University researcher and author of a report about YouTube’s “Alternative Influence Network”.

This ever-expanding pool of socially harmful content makes it increasingly difficult to counter misinformation claims with authoritative fact-checking, and turns content moderation into a game of whack-a-mole.

Albright told BuzzFeed News that YouTube is “algorithmically and financially incentivising the creation of this type of content at the expense of truth.”

In addition to deceitful content uploaded by creators, YouTube also plays host to a great deal of AI-generated videos that seemingly look to exploit the SEO surrounding popular topics and current events. Such channels pump out thousands of videos whose titles are stuffed with references to politics and current affairs.

Albright found a collection of 19 channels that had generated more than 78,000 videos using an “advanced artificial intelligence technology” called T. New content was uploaded every three to four minutes, and consisted of a computerised voice reading excerpts from published news articles against a photo slideshow.

An example of a video generated by “T”. Most of the channels in this network were disabled following Albright’s report.

“Everything about [the] videos suggests SEO, social politics amplification, and YouTube AI-playlist placement,” Albright wrote. They served to boost the overall relevance of the videos and associated channels.

These kinds of AI-generated videos are being posted faster than they can be identified, and even while some are taken down, others continue to crop up in their place.

Susceptibility to exploitation by malicious actors is not a direct fault of YouTube, but rather an outcome of its reliance upon a data-driven system. Once vulnerabilities are found, however, they should be monitored and addressed appropriately.

Nevertheless, YouTube often lags behind other major platforms when it comes to reining in harmful disinformation content.

Most recently, the company disabled 210 channels that were spreading disinformation about the ongoing protests in Hong Kong, several days after similar actions by Facebook and Twitter. YouTube did not directly address the delayed response, nor did it provide examples of the removed content, as the other platforms did.

According to MSN, several removed videos accused exiled Chinese billionaire Guo Wengui, who has used social media to accuse leaders of the Chinese Communist Party of corruption, of being a fraud.

Despite taking down those videos, however, YouTube said it will continue to allow the Chinese government to post and advertise on the platform.

“Even though the major Western social platforms are blocked within China, Chinese state news outlets have spent hundreds of thousands of dollars to build their presence on YouTube, Facebook, Twitter and other sites, government procurement records indicate,” MSN reported.

Though YouTube does have a system for identifying and labeling content that has been sponsored by governments, its application has been inconsistent.

In early 2018, the US Senate began questioning Google executives about the company’s possible role in Russian interference in the 2016 election.

YouTube CEO Susan Wojcicki told Wired that the year and a half after Donald Trump’s election had taught her “how important it is for us … to be able to deliver the right information to people at the right time.”

Ahead of the EU elections this May, YouTube launched “Top News” and “Breaking News” carousels — which had been previously implemented in the US — on European editions of the site, in an effort to make authoritative news sources more prominent.

In August, YouTube also confirmed it is in the process of “expanding an experimental tweak to its recommendation engine that’s intended to reduce the amplification of conspiracy theories to the UK market,” following similar changes introduced in the US in January.

Much remains to be done in the way of cracking down on misinformation content in the rest of the world.

“We are constantly undertaking efforts to deal with misinformation [on YouTube],” said Google CEO Sundar Pichai while testifying before Congress in December 2018. “We have clearly stated policies and we have made lots of progress in many of the areas where over the past year. … We are looking to do more.”

Although Wojcicki asserted in a recent press release that “Problematic content represents a fraction of one percent of the content on YouTube,” she acknowledged “...This very small amount has a hugely outsized impact, both in the potential harm for our users, as well as the loss of faith in the open model that has enabled the rise of your creative community.”

Researchers like Becca Lewis of Stanford University were critical of Wojcicki’s press release. She also pointed out that YouTube executives have been “extremely opaque” in their decision-making.

Wojcicki did not detail that impact and harm, however, or explain how much viewership the offensive content draws. Given the sheer magnitude of content being published and shared on YouTube, even a fraction of a percent could amount to millions of videos.

“From my experience, in the disinformation space, all roads seem to eventually lead to YouTube,” Albright wrote. “This exacerbates all of the other problems, because it allows content creators to monetise potentially harmful material while benefiting from the visibility provided by what’s arguably the best recommendation system in the world.”

He argued the platform should implement policies that include optional filters and human moderators to help protect children and other vulnerable users from this material.

A YouTube spokeswoman told TechCrunch the company acknowledges it needs to reform a recommendation system that has consistently proven to abet the spread of harmful clickbait and misinformation into the mainstream.

The company will focus on reducing the recommendation of “borderline” content that toes the line of acceptable content policies and, in Chaslot’s words, “perverts civic discussion.”

The spokeswoman also said that a US test of a reduction in conspiracy junk recommendations led to a 50 percent drop in the number of views from recommendations. This suggests the amplification of this content could be further curbed if the company were to more aggressively demote it.

Some critics, like Slate technology writer April Glaser, are sceptical these actions will be sufficient.

“YouTube may have to do more than enforce community policies to stop the long-standing scourge of conspiracy theories on YouTube,” she argued. “It [may] have to begin making some real editorial decisions.”
