YouTube’s recommendation algorithm drives more than 700 million hours of watch time per day, but no one, including its creators, fully understands how it works.

For much of the past decade, Guillaume Chaslot has been trying to draw attention to a problem he helped create.

A former YouTube employee who worked on artificial intelligence for YouTube’s “recommended for you” feature in 2010-11, Chaslot now devotes his time to exposing the vulnerabilities and potential for exploitation that result from the algorithmic prioritisation of engagement—time online and clicks—on the platform.

While still at the company, Chaslot had started working on a project to introduce diversity into the platform’s recommendations. The project was shut down because it did not do as well for watchtime, he told NBC News.

He maintains that he was fired in 2013 for asking too many questions about how the company managed the algorithm.

Chaslot now works as an advisor for the Center for Humane Technology, and is the creator of a tool called AlgoTransparency, which aims to illustrate the types of content the YouTube algorithm is directing users toward.

Recent years have seen mounting evidence that YouTube’s recommendation engine has a tendency to send users down a “rabbit hole” of deceptive or extremist content. Problems like this arise because engineers often design for how they hope a product will be used; they don't always consider the real and dangerous ways in which it might be exploited for personal or organisational gain, profit or politics.

What happens when a system that is used daily by billions of people is built to prioritise watchtime and profitability over everything else? More than affecting the user experience, this can result in wide-reaching implications for the state of public information and civil discourse.

Unlike social media platforms like Facebook and Twitter, where you’re mostly served content from the accounts you already follow, YouTube is designed to keep users engaged by pointing them toward content they’re likely to be interested in, but might not otherwise seek out themselves. To do so, the company has designed algorithms that weigh users’ watch history, keywords and engagement—among other undisclosed data points—to find and recommend other videos they might enjoy.

"The designers of these systems don’t pay attention to the content itself, but instead pay attention to a set of signals, trying to determine what makes something relevant to one person or another," said Danah Boyd, founder and president of Data & Society, during a talk at the Knight Media Forum.

The recommendation algorithm is the single biggest decider of what people watch on YouTube, directing more than 700,000,000 hours of watch time each day on a platform that boasts two billion monthly active users, and counting.

Eighty-one percent of U.S. adults who use YouTube say they at least occasionally watch videos suggested by the recommendation algorithm, according to a 2018 survey by the Pew Research Center. Younger users are especially likely to do so regularly.

“Our job is to give the [user] a steady stream [of content], almost a synthetic or personalised channel,” YouTube’s Chief Product Officer Neal Mohan said at the International Consumer Electronics Show in January.

While this sort of personalisation can be helpful for guiding users to the most relevant search results, it can also have disastrous consequences, like recommending videos of children to paedophiles—who are, after all, the people most likely to engage with that sort of content.

When coupled with a mandate to keep users engaged for as long as possible, algorithmic customisation might derail innocuous news-related searches into a conspiracy-laden maelstrom of content claiming that mass shooting survivors are “crisis actors”, or that the Earth is flat, or that aliens exist underneath Antarctica.

“The whole point of the algorithm is to keep people on the platform,” said Zoë Glatt, a PhD researcher at the London School of Economics who is conducting ethnographic research with YouTube content creators. “...And of course, that has resulted in certain types of content being promoted—any type of content being promoted—that will keep people watching.”

The recommendation engine impacts both users and creators by pushing discourse in a certain direction, thereby increasing demand for that sort of content — resulting in a cycle that is hard to break.

What’s worse, no one—including the programmers who developed it—fully knows how it works.

The company has invested a great deal into developing machine learning technology—which employs trial and error methods combined with statistical analysis—that analyses users’ tastes and decision-making processes to determine what videos to suggest “up next” or on autoplay.

In 2016, YouTube began employing an artificial intelligence technique called a deep neural network, which can recognise hidden patterns and make connections between videos that humans would not be capable of, based on hundreds of signals largely informed by a user’s watch history.

“We don’t have to think as much,” YouTube recommendations engineer Jim McFadden told the Wall Street Journal. “We’ll just give it some raw data and let it figure it out.”

Details about the algorithm—which is constantly changing—are kept secret, as is aggregate data revealing which videos are heavily promoted by the algorithm, or what proportion of individual views are received from “up next” suggestions (as opposed to organic searches).

The lack of transparency regarding the intricacies of a system that is responsible for more than 70 percent of viewing time on the platform is troubling.

“By keeping the algorithm and its results under wraps, YouTube ensures that any patterns that indicate unintended biases or distortions associated with its algorithm are concealed from public view,” Paul Lewis and Erin McCormick wrote in the Guardian.

“The ease with which a person can be transported from any innocuous search to the lunatic fringe of YouTube is startling,” wrote NBC News reporter Ben Popken.

He shared an example of how an innocent search for videos about “Saturn” for his child’s science assignment quickly prompted sensationalist recommendations including Nostradamus predictions and pro-Putin propaganda.

“What had started out as a simple search for fun science facts for kindergartners had quickly led to a vast conspiracy ecosystem,” Popken wrote.

One-third of U.S. parents regularly let their children watch videos on YouTube, according to the 2018 Pew survey. Of the 81 percent who allow their children on the site at all, 61 percent say their child has encountered “content that they felt was unsuitable for children.”

The issue of algorithmic misdirection certainly does not just affect children — any user can fall victim to its influence.

The allure of videos like the ones Popken was recommended lies in their alarming claims and promises of forbidden truths, often offering to let you in on “what they don’t want you to know”.

"What we are witnessing is the computational exploitation of a natural human desire: to look ‘behind the curtain,’ to dig deeper into something that engages us," wrote Zeynep Tufekci, a researcher at the University of North Carolina.

Tufekci thinks YouTube profits from introducing its viewers to dangerously addictive content. After all, greater watch time generates more ad inventory, which equates to more revenue.

Prioritising engagement over user experience and well-being “can create alignment towards values and behaviours that are particularly engaging for a small group of people at the cost of others,” Chaslot wrote in a 2016 blog post for Medium.

YouTube’s proprietary protections make it hard to adequately investigate these concerns — thus perpetuating the “black box” problem. It is nearly impossible to reverse-engineer an algorithm without access to information about its inputs and design.

However, analysis of the recommendation algorithm by the Pew Research Center found the system encourages users to watch progressively longer content over time, which also indicates a drive toward higher revenue.

Beyond YouTube’s lack of transparency, problems like this are hard to quantify and regulate because every user’s experience is customised based on their individual preferences and behaviour on the platform.

“No two users watch the same videos, nor do they watch them the same way, and there may be no way to reliably chart a ‘typical’ viewer experience,” wrote members of BuzzFeed News’s Tech + News Working Group. “YouTube’s rationale when deciding what content to show its viewers is frustratingly inscrutable.”

Numerous publications, including The New York Times and BuzzFeed News, have been denied access to details about the functionality of the “Up Next” algorithm, instead being assured by YouTube that the company is working to improve the user experience.

This sort of data would be useful to regulators, academic researchers, fact-checkers and journalists for assessing the type of content the platform is most likely to promote, as well as its effects. In obscuring this information, YouTube is protecting itself from scrutiny.

In an effort to overcome YouTube’s reticence, in 2018 Chaslot created an aptly named resource called AlgoTransparency, which simulates the behaviour of a YouTube user—albeit one without a viewing history—to capture generic, rather than personalised, recommendations. It’s meant to simulate the “rabbit hole” users might descend into when surfing the site.

The program discovers videos through YouTube’s search engine, then follows a chain of recommended titles that appear “up next”, repeating the process thousands of times to assemble layers of data about the videos being promoted by the algorithm.
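The crawl described above can be illustrated with a minimal sketch. It is not AlgoTransparency's actual code: the `UP_NEXT` and `SEARCH_RESULTS` tables, the video names and the `crawl` function are all hypothetical stand-ins, and nothing here contacts YouTube. The sketch starts from search results, follows "up next" links layer by layer, and tallies how often each video is recommended.

```python
from collections import Counter

# Hypothetical stand-in for the site: each video maps to its "up next" list.
# A real tool would fetch these from the platform; this sketch does not.
UP_NEXT = {
    "news_clip": ["analysis", "conspiracy_a"],
    "analysis": ["news_clip", "conspiracy_a"],
    "conspiracy_a": ["conspiracy_b", "analysis"],
    "conspiracy_b": ["conspiracy_a"],
}

# Hypothetical search results for a query (assumed data, for illustration).
SEARCH_RESULTS = {"epstein": ["news_clip", "analysis"]}


def crawl(query, depth, branching):
    """Count how often each video is recommended, starting from a search."""
    counts = Counter()
    frontier = list(SEARCH_RESULTS.get(query, []))
    for _ in range(depth):
        next_frontier = []
        for video in frontier:
            # Follow only the first `branching` recommendations per video.
            for rec in UP_NEXT.get(video, [])[:branching]:
                counts[rec] += 1
                next_frontier.append(rec)
        frontier = next_frontier
    return counts


if __name__ == "__main__":
    for video, n in crawl("epstein", depth=2, branching=2).most_common():
        print(video, n)
```

In this toy graph, a video that many others point to accumulates a high count after only a couple of layers, which is the kind of signal such a tally is meant to surface. The number of paths followed grows quickly with `depth` and `branching`, so a real crawl would need to cap both.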

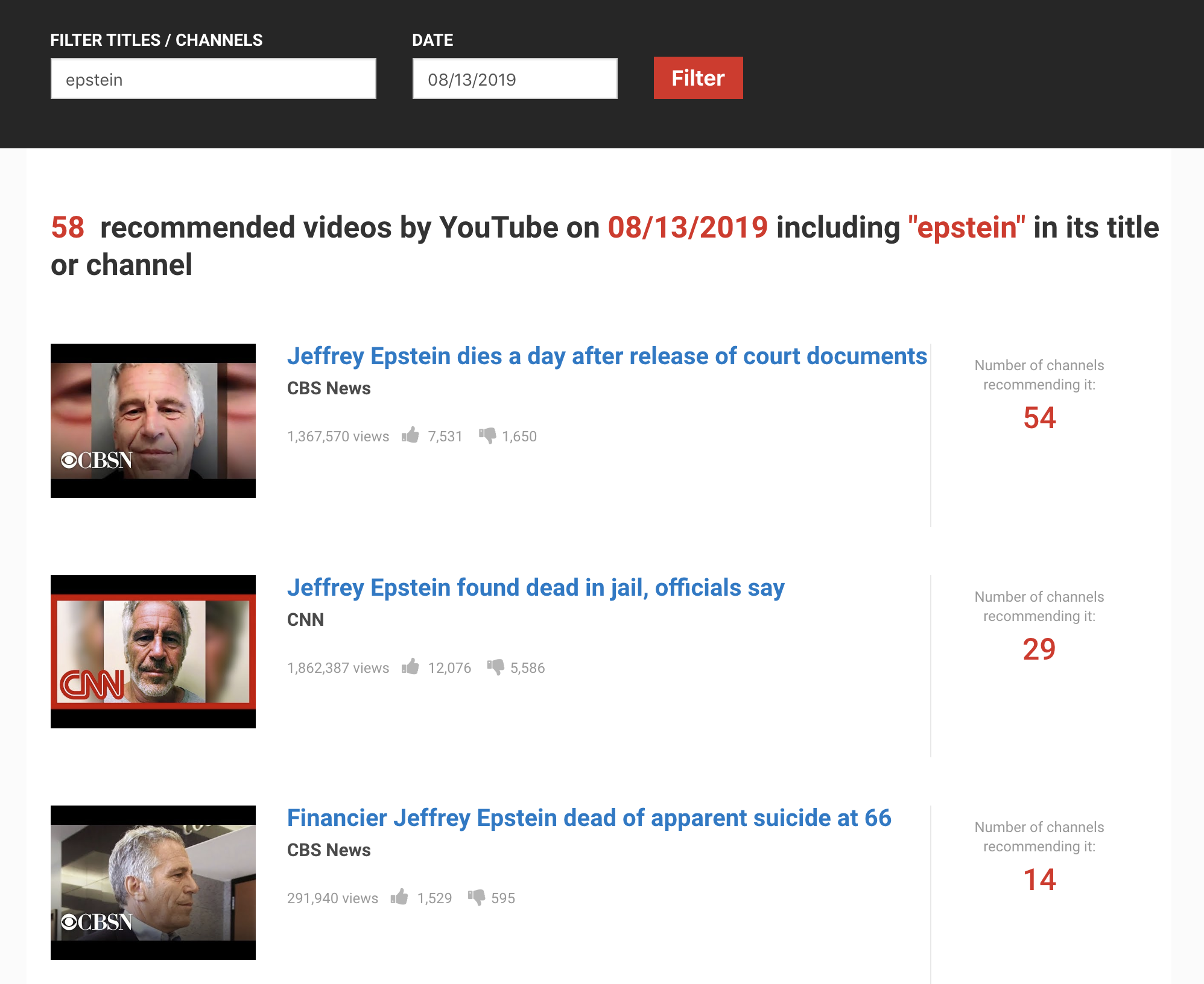

For instance, a search for “Epstein” on 13 August 2019, three days after the American financier and convicted sex offender died in prison, returned 57 relevant videos that were frequently recommended on YouTube that day. (One video was about a different person with the same last name.)

Thirty-six videos, or 63 percent, came from mainstream news sources like CBS, CNN, Fox News and MSNBC. Several were posted by individual content creators, including Ben Shapiro and Tim Pool, who were identified as members of the “Alternative Influence Network” in a 2018 Data & Society report.

Six videos directly included the word ‘conspiracy’ in the title. Others, like a video by “Political Vigilante” podcast host Graham Elwood, featured headings like “Ruling Class Pedophiles Have Epstein Murdered in Jail”.

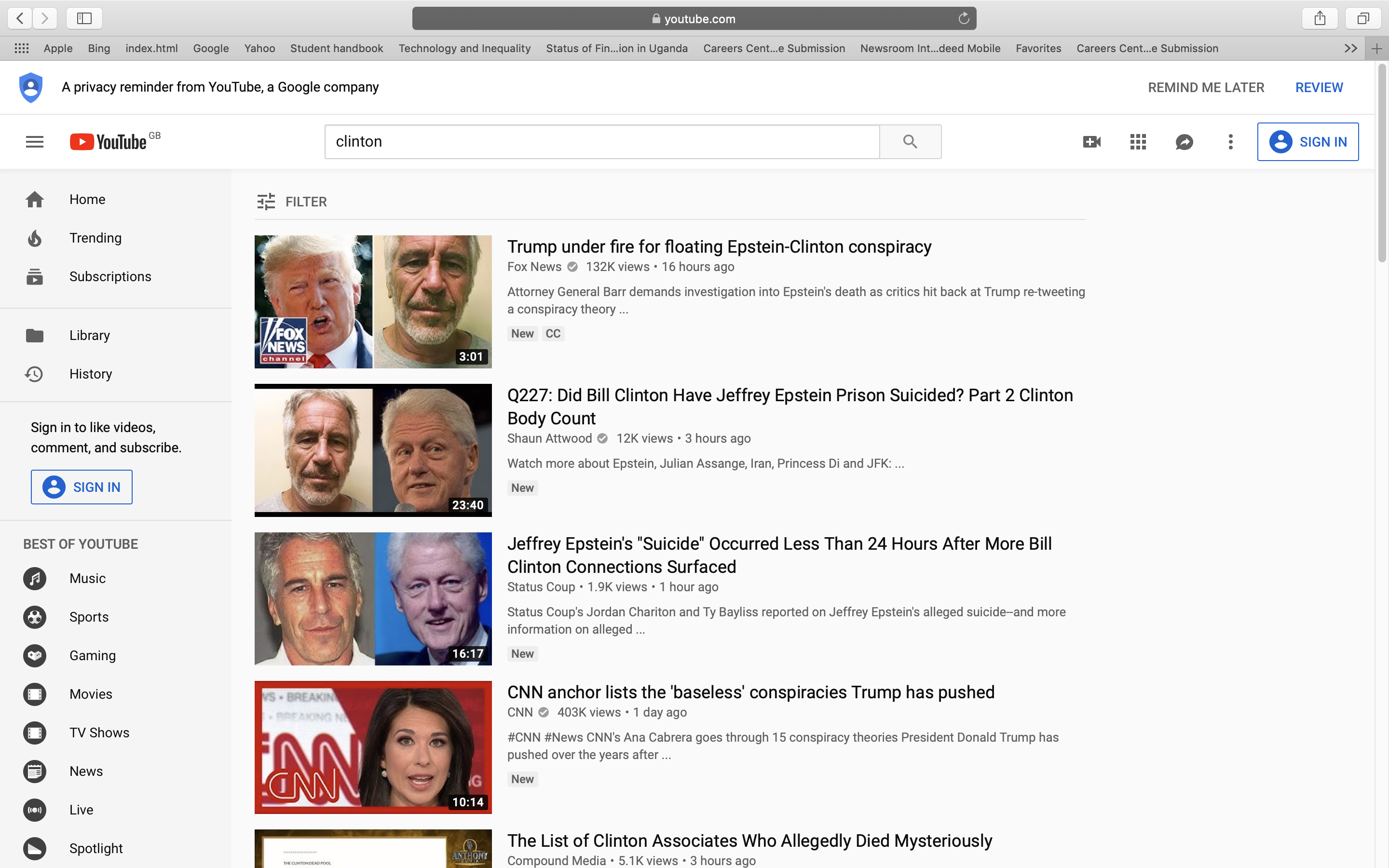

After President Donald Trump retweeted a conspiracy theory alleging Bill Clinton had a hand in Epstein’s death, a YouTube search for “Clinton” also returned a mix of mainstream news and conspiracy content.

A YouTube search for “Clinton” the same day also returned a mix of mainstream news and conspiracy content. (Search results captured in a cleared, cookie-less browser on 13 August 2019.)

The searches illustrated the kind of content users searching on YouTube for updates about a major ongoing news story were likely to be directed to: a mix of authoritative reporting, punditry and conspiracy theorising.

Various news organisations have attempted their own investigations of YouTube’s algorithm, some in collaboration with Chaslot.

The Guardian used AlgoTransparency to conduct a content analysis assessing the top 500 videos recommended following searches for “Trump” and “Clinton”, respectively, in the months leading up to the 2016 election. After watching all the videos and analysing their titles, the newspaper found two-thirds to be obviously partisan, as opposed to politically neutral or insufficiently biased. Of those, 84 percent were favourable to Trump, with a significant portion of the videos devoted to criticising Hillary Clinton through conspiracy videos or “fake news”. The channels whose videos were recommended most often included Alex Jones, Fox News, The Young Turks and MSNBC.

The data indicated partisan videos recommended by YouTube were approximately six times more likely to favour Trump’s presidential campaign than Clinton’s.

John Kelly, the executive director of Graphika—a commercial analytics firm that tracks political disinformation campaigns—told the Guardian the data indicated “an unmistakable pattern of coordinated social media amplification.”

Graphika found more than 513,000 Twitter accounts had tweeted links to at least one of the YouTube-recommended videos in the database in the six months leading up to the election. More than 36,000 accounts tweeted at least one of the videos 10 or more times.

“Over the months leading up to the election, these videos were clearly boosted by a vigorous, sustained social media campaign involving thousands of accounts controlled by political operatives, including a large number of bots,” Kelly said. “The most numerous and best-connected of these were Twitter accounts supporting President Trump’s campaign, but a very active minority included accounts focused on conspiracy theories, support for WikiLeaks, and official Russian outlets and alleged disinformation sources.”

Pro-Clinton videos, on the other hand, were promoted by a much smaller network of accounts that now identify as a “resist” movement.

YouTube took issue with the conclusions of the Guardian’s analysis, saying the sample of videos evaluated does not capture a complete, and therefore accurate, picture of the type of content that was being recommended on the platform in the year before the election.

“Our search and recommendation systems reflect what people search for, the number of videos available, and the videos people choose to watch on YouTube,” a spokesperson told the Guardian. “That’s not a bias towards any particular candidate; that is a reflection of viewer interest.”

But is this responsible? Prioritising engaging, but potentially deceitful, content over access to authoritative information is not only detrimental to the user experience but also contributes to the decay of truth.

“[J]ust because people are willing to watch something doesn’t mean they’re enjoying it,” Popken wrote. “YouTube has to balance protecting its profits with the trust of its users.”

In the aftermath of widespread criticism that followed the 2016 U.S. presidential election, YouTube has highlighted its efforts to improve how the site handles news-related queries and recommendations. This has included the introduction of a “Breaking News” shelf on the homepage, as well as a “Top News” shelf that appears alongside news-related search results. The company says it has also taken a tougher stance on videos that walk the line but do not explicitly violate policies regarding inflammatory religious or supremacist content.

However, a 2018 Wall Street Journal investigation found evidence of extremist tendencies across a wide variety of YouTube content. Its research revealed the recommendation algorithm’s potential bias toward incendiary content.

The Journal found YouTube’s recommendations “often lead users to channels that feature conspiracy theories, partisan viewpoints and misleading videos, even when those users haven’t shown interest in such content... despite changes the site has recently made to highlight more-neutral fare.”

“When users show a political bias in what they choose to view, YouTube typically recommends videos that echo those biases, often with more-extreme viewpoints,” Jack Nicas wrote.

According to an investigation by BuzzFeed News from January of this year, it took nine clicks to get from an innocuous PBS clip about the 116th US Congress to a 2011 anti-immigrant video from an organisation called the Center for Immigration Studies, which was classified as a hate group by the Southern Poverty Law Center in 2016.

The video was not accompanied by a panel linking to contextual info about the channel, despite YouTube having had a partnership with the SPLC since 2018.

The video was recommended alongside content from both authoritative sources and channels like PragerU, an unaccredited right-wing online university.

The research suggests “YouTube users who turn to the platform for news and information… aren’t well served by its haphazard recommendation algorithm, which seems to be driven by an id that demands engagement above all else.”

Researchers and regulators are not the only parties concerned with trying to understand the algorithm. Content creators who rely on advertising revenue from their videos are also significantly impacted by the workings of and changes to the system.

“I think it’s a really important part of the job for content creators because they don’t really know how it works but it’s really important for their income,” Glatt said. “...So they have to talk about how they think it works and give each other advice about how to use it or how to game it.”

Because YouTubers’ livelihoods are beholden to a system they don’t understand, they often resort to guessing and talking about it in the abstract, even at industry events like VidCon.

“It’s not about how the algorithm works, it’s about how people think it works, and how they act in response to it,” Glatt said. “How do [creators] imagine that it works and how do they act in response?... I think the creators I’ve spoken to anthropomorphise the algorithms.”

YouTube’s recommendation algorithm shapes the experiences of billions of users, drives trends, and has the potential to make or break the careers of creators. Given the scope of its influence, the company’s decision-making can have a powerful effect on curbing—or exacerbating—the spread of disinformation.

However, it is important to also recognise YouTube’s problems are symptomatic of larger societal issues.

“We can’t just think of the algorithm as being this thing that’s untethered from social and political forces,” said Becca Lewis, a PhD researcher at Stanford University who authored the Data & Society report about the Alternative Influence Network. “The content creators on YouTube are creating content and marketing that content with an eye to the recommendation algorithm and trying to manipulate it. Sometimes the radicalisation can happen without the help of the algorithm at all. The algorithm itself was the result of political, business, social choices made within YouTube the company.”

Nonetheless, many regulators and researchers believe YouTube should be more intentional about mitigating the harmful effects of its algorithm, such as by integrating more human decision-making.

“Small differences in the algorithms can yield large differences in the results,” Chaslot wrote.
